Snowflake has introduced new AI offerings, including Snowflake Cortex and Snowpark Container Services. They let organizations integrate generative AI into their work, analyze data quickly, and build AI applications within a secure, governed environment.

Snowflake Vector Database: Revolutionizing Work with Generative AI and LLMs

Generative AI (GenAI) and large language models (LLMs) are changing how people work. Snowflake has introduced a product lineup that brings the platform’s ease of use, security, and governance to GenAI. According to Snowflake, these offerings let users add LLMs to analytical processes in seconds, let developers build GenAI-powered apps in minutes, and support heavier workflows, such as fine-tuning foundation models on enterprise data, all within Snowflake’s secure perimeter. The goal is to let developers and analysts of all skill levels apply GenAI to securely governed enterprise data.

Exploring Snowflake Cortex and Snowpark Container Services

Snowflake provides two foundational components to help customers securely integrate generative AI with their governed data:

Snowflake Cortex

Snowflake Cortex, in private preview at the time of writing, is a fully managed service that provides access to leading AI models, LLMs, and vector search, so organizations can analyze data and build AI applications quickly. Cortex offers serverless functions for inference on top-tier generative LLMs such as Meta AI’s Llama 2, task-specific models that accelerate analytics, and vector search capabilities.

Cortex is not only for developers: it also powers LLM-enhanced experiences with complete user interfaces, including Document AI, Snowflake Copilot, and Universal Search, all in private preview.

Snowpark Container Services

Snowpark Container Services, coming soon to public preview in select AWS regions, is an additional Snowpark runtime that lets developers deploy, manage, and scale custom containerized workloads and models. This includes tasks such as fine-tuning open-source LLMs on Snowflake-managed GPU instances, all within the secure boundary of their Snowflake account.

Leveraging AI with Ease and Security

Quick Access to AI for All Users

To make AI accessible beyond specialists, Snowflake lets users work with state-of-the-art LLMs without custom integrations or front-end development. This includes complete UI-based experiences such as Snowflake Copilot, as well as LLM-based SQL and Python functions, available through Snowflake Cortex, that accelerate analytics with specialized and general-purpose models.

Rapid LLM App Development

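Developers can build LLM apps that understand the nuances of their business and data in minutes, without integrations, manual LLM deployment, or GPU infrastructure management. Using Snowflake Cortex’s Retrieval Augmented Generation (RAG) capabilities, developers can create customized LLM applications inside Snowflake: relevant content is first retrieved from the organization’s own data, typically through vector search, and then supplied to the LLM as context for its answer.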
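
The retrieval step behind RAG can be sketched in plain Python. This is a minimal illustration, not Cortex’s implementation: the three-dimensional "embeddings" are hand-made stand-ins for vectors an embedding model would produce, and the function names (`retrieve`, `build_rag_prompt`) are hypothetical. In Snowflake, the embedding and similarity steps would be handled by Cortex’s embedding and vector distance functions, and the assembled prompt would go to an LLM such as Llama 2.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vec: Sequence[float],
             corpus: List[Tuple[str, List[float]]],
             k: int = 2) -> List[str]:
    """Return the k corpus texts whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


def build_rag_prompt(question: str, context_chunks: List[str]) -> str:
    """Ground the LLM's answer in the retrieved chunks."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Toy corpus: hand-made vectors stand in for real embeddings (hypothetical data).
corpus = [
    ("Refunds are processed within 5 business days.", [0.9, 0.1, 0.0]),
    ("Our office is closed on public holidays.", [0.0, 0.2, 0.9]),
    ("Refund requests need an order number.", [0.8, 0.3, 0.1]),
]
query_vec = [1.0, 0.2, 0.0]  # pretend embedding of the question below

top_chunks = retrieve(query_vec, corpus, k=2)
prompt = build_rag_prompt("How do refunds work?", top_chunks)
print(prompt)
```

Only the top-k chunks reach the LLM, which keeps the prompt short and ties the answer to the organization’s own data; the unrelated holiday notice is filtered out before generation.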

Core Functions of Snowflake Cortex

As a fully managed service, Snowflake Cortex provides the building blocks for LLM app development without complex infrastructure management. These include general-purpose functions backed by leading open-source LLMs and high-performance proprietary LLMs.

Initial functions in private preview include:

- Complete: Users can pass a prompt and select the LLM they want to use, choosing between different sizes of Llama 2.

- Text to SQL: Generates SQL from natural language using the same LLM that powers Snowflake Copilot.

Additional functions coming soon to private preview include:

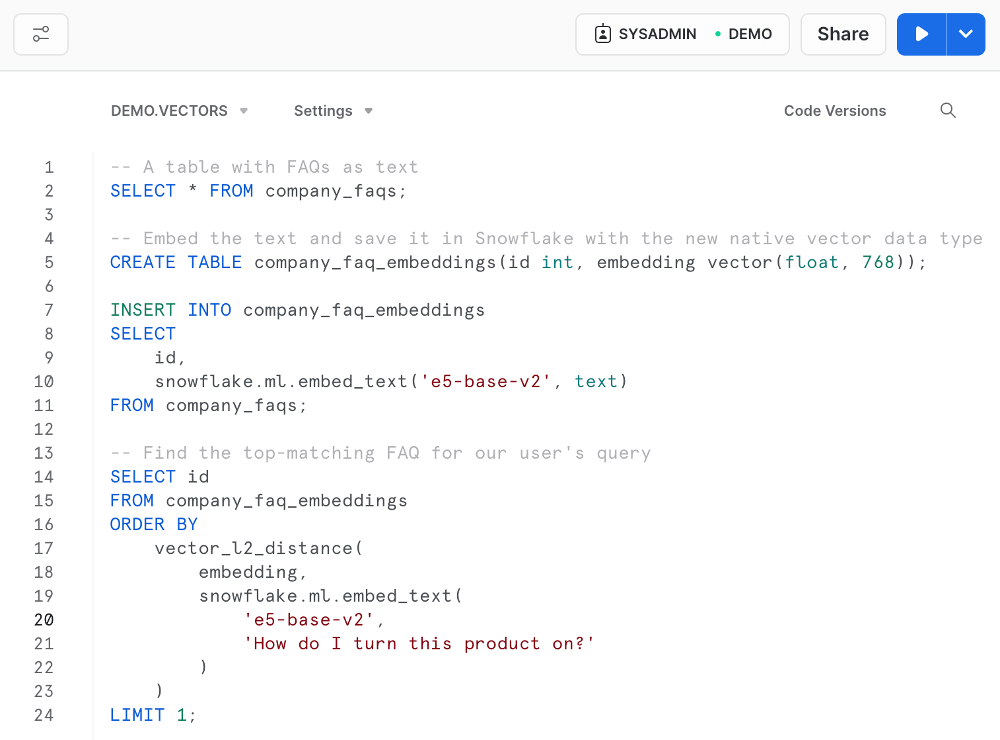

- Embed Text: Transforms text input to vector embeddings using a selected embedding model.

- Vector Distance: Calculates the distance or similarity between vectors using functions such as cosine similarity, L2 (Euclidean) distance, and inner product.

- Native Vector Data Type: Supports vector data as a native type in Snowflake.
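
The three measures named in the Vector Distance item can be written out directly. The sketch below is plain Python for illustration only; it is not Snowflake’s implementation, and the function names are hypothetical rather than the names of the Cortex functions.

```python
import math
from typing import Sequence


def _check_same_length(a: Sequence[float], b: Sequence[float]) -> None:
    # zip() would silently truncate mismatched vectors, so fail loudly instead.
    if len(a) != len(b):
        raise ValueError(f"dimension mismatch: {len(a)} != {len(b)}")


def inner_product(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of element-wise products (dot product)."""
    _check_same_length(a, b)
    return sum(x * y for x, y in zip(a, b))


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance, i.e. the L2 norm of the difference a - b."""
    _check_same_length(a, b)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product divided by the product of the vectors' L2 norms."""
    norm_product = math.sqrt(inner_product(a, a)) * math.sqrt(inner_product(b, b))
    if norm_product == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return inner_product(a, b) / norm_product


a = [3.0, 4.0]
b = [4.0, 3.0]
print(inner_product(a, b))      # 24.0
print(l2_distance(a, b))        # about 1.4142 (the square root of 2)
print(cosine_similarity(a, b))  # about 0.96 (24 / (5 * 5))
```

For unit-length vectors the measures rank results identically: the inner product equals the cosine similarity, and the squared L2 distance equals 2 - 2 * cosine similarity, so sorting by any of them gives the same order.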

Streamlit in Snowflake

Streamlit in Snowflake, in public preview, lets teams quickly build LLM apps with minimal Python code and no front-end experience. These apps can be deployed securely and shared across an organization through Snowflake’s existing role-based access controls, with a unique URL generated in a single click.

Customizing LLM Applications with Snowpark Container Services

Snowpark Container Services, coming soon to public preview in select AWS regions, gives developers the flexibility to build and deploy custom containerized workloads in Snowflake. This includes fine-tuning open-source LLMs, running vector databases, and building custom user interfaces with frameworks such as ReactJS, all within Snowflake’s secure boundary.

Getting Started with Snowflake’s AI and LLM Tools

Snowflake aims to let users harness LLMs and AI on their enterprise data quickly and securely. Whether you want immediate AI integration or the flexibility to build custom LLM apps, Snowflake Cortex, Streamlit, and Snowpark Container Services provide the tools to do so without moving data outside Snowflake’s secure environment. For more information and the latest developments, visit the Snowflake website.

Summary in Table Format

| Feature | Description |
|---|---|
| Snowflake Cortex | A fully managed service, in private preview, that provides access to top-tier AI models, LLMs, and vector search so organizations can analyze data and build AI applications efficiently. |
| Snowpark Container Services | Coming soon to public preview in select AWS regions; streamlines the deployment, management, and scaling of custom containerized workloads and models within Snowflake’s secure environment. |
| Instant Access to AI Capabilities | User-friendly experiences that let users work with state-of-the-art LLMs without custom integrations or front-end development, improving analytics efficiency and lowering costs. |
| Rapid Development of LLM Apps | Snowflake Cortex functions, including Complete and Text to SQL, let developers build LLM apps tailored to their data and business without complex integrations or manual model deployment. |
| Streamlit Integration | Teams build LLM apps with minimal Python code and no front-end expertise; apps are deployed securely and shared via unique URLs under Snowflake’s access controls. |
| Snowpark Container Services (Customization) | Lets developers deploy and manage custom UIs and fine-tune open-source LLMs within Snowflake’s secure environment, with the flexibility to integrate commercial apps, notebooks, and advanced LLMOps tooling. |

Frequently Asked Questions

What is Snowflake Cortex?

Snowflake Cortex is a fully managed service that offers access to top-tier AI models, LLMs, and vector search functionality. It helps organizations analyze data quickly and build AI applications within a secure environment.

What are the key features of Snowpark Container Services?

Snowpark Container Services streamlines the deployment, management, and scaling of custom containerized workloads and models within Snowflake’s secure environment. It enables developers to fine-tune open-source LLMs and deploy custom UIs, adding customization and flexibility.

How does Snowflake democratize access to AI capabilities?

Snowflake provides user-friendly experiences, allowing users to leverage cutting-edge LLMs without custom integrations or front-end development. This democratizes access to enterprise data and AI, enhancing analytics efficiency and lowering costs.

What are the benefits of Streamlit integration?

Streamlit integration enables teams to develop LLM apps with minimal Python code, eliminating the need for front-end expertise. Apps can be securely deployed and shared via unique URLs, leveraging Snowflake’s access controls for seamless collaboration.

How does Snowpark Container Services facilitate customization?

Snowpark Container Services enables developers to deploy and manage custom UIs and fine-tune open-source LLMs within Snowflake’s secure environment. It also provides the flexibility to integrate commercial apps, notebooks, and advanced LLMOps tooling.